GRASS GIS at ICC 2017


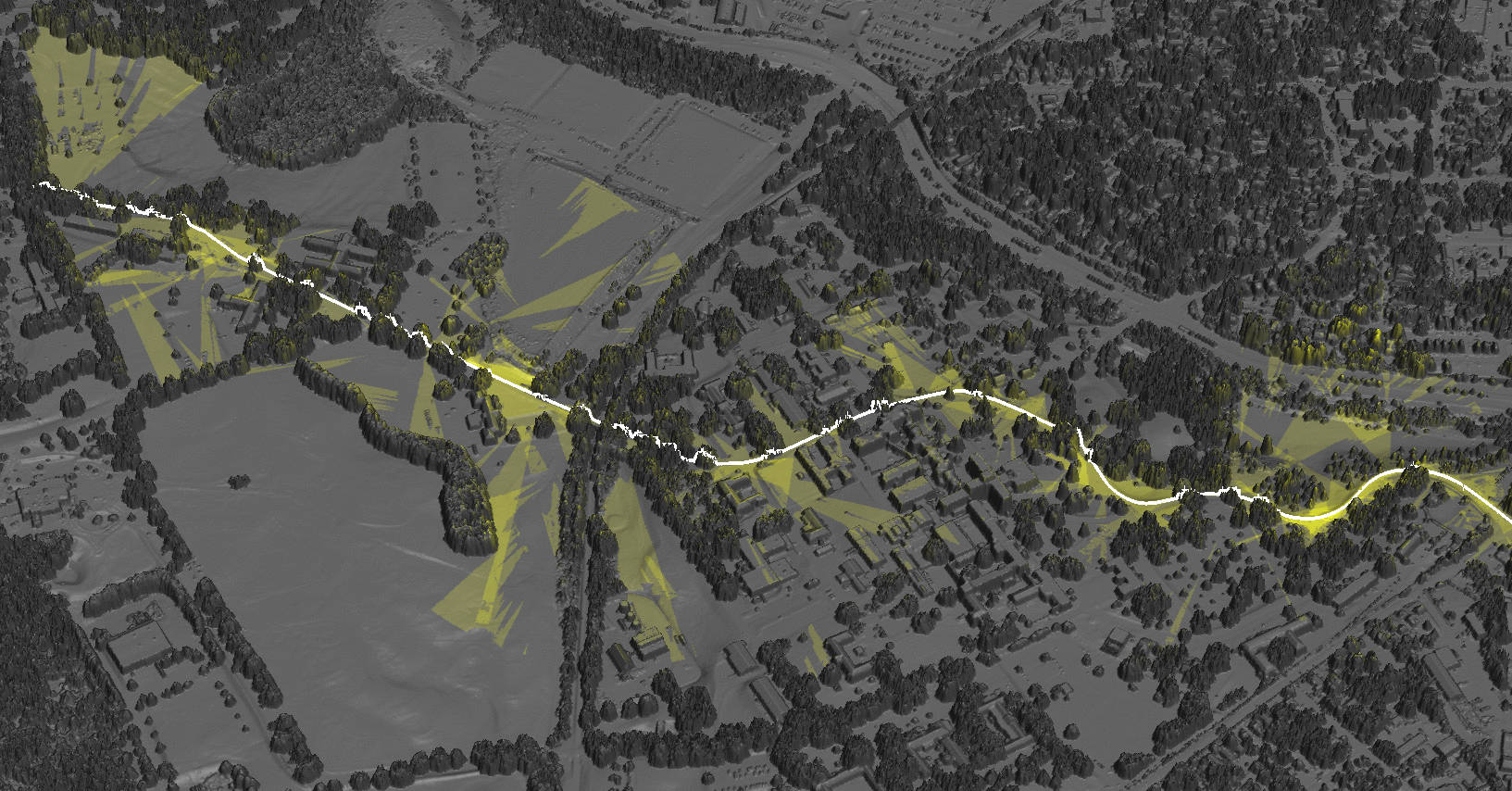

=== Analytical data visualizations with GRASS GIS and Blender === | === Analytical data visualizations with GRASS GIS and Blender === | ||

[[File:Cumulative viewshed.png|300px|thumb|right|''Analytical data visualizations with GRASS GIS and Blender:'' Cumulative viewshed computed from views along a road on DSM (3D view in GRASS GIS)]] | |||

by Vaclav Petras, Anna Petrasova, Payam Tabrizian, Brendan Harmon, and Helena Mitasova from North Carolina State University | by Vaclav Petras, Anna Petrasova, Payam Tabrizian, Brendan Harmon, and Helena Mitasova from North Carolina State University | ||

Revision as of 21:46, 22 June 2017

28th International Cartographic Conference of the International Cartographic Association, July 1-7, 2017, Washington, DC, USA, http://icc2017.org

Workshop

All of the following sessions are part of a workshop titled “Spatial data infrastructures, standards, open source and open data for geospatial” (SDI-Open 2017), jointly organized by the Commission on Open Source Geospatial Technologies and the Commission on SDI and Standards (http://sdistandards.icaci.org/).

- Description: Spatial data infrastructures aim to make spatial data findable, accessible and usable. Open source software and open data portals help to make this possible. In this workshop, participants will be introduced to SDIs, standards, open source and open data in a fun way. The workshop comprises invited presentations to provide an introduction and background to SDIs, standards, open source and open data. A number of hands-on introductions to open source geospatial software together with mapping tasks will give participants the opportunity to explore these tools.

- Date: 1-2 July 2017

- Place: George Washington University, Washington, DC, United States

OSGeo Live introduction

by Vaclav Petras from North Carolina State University

- Format: Talk

Analytical data visualizations with GRASS GIS and Blender

by Vaclav Petras, Anna Petrasova, Payam Tabrizian, Brendan Harmon, and Helena Mitasova from North Carolina State University

- Format: Talk and demonstration

- Materials: Analytical data visualizations at ICC 2017

Mapping open data with open source geospatial tools

by Vaclav Petras, Anna Petrasova, Payam Tabrizian, Brendan Harmon, and Helena Mitasova from North Carolina State University

- Description: Participants will interactively visualize open data and design maps with open source geospatial tools, including the Tangible Landscape system.

- Format: Hands-on

- Materials: Analytical data visualizations at ICC 2017

Presentations

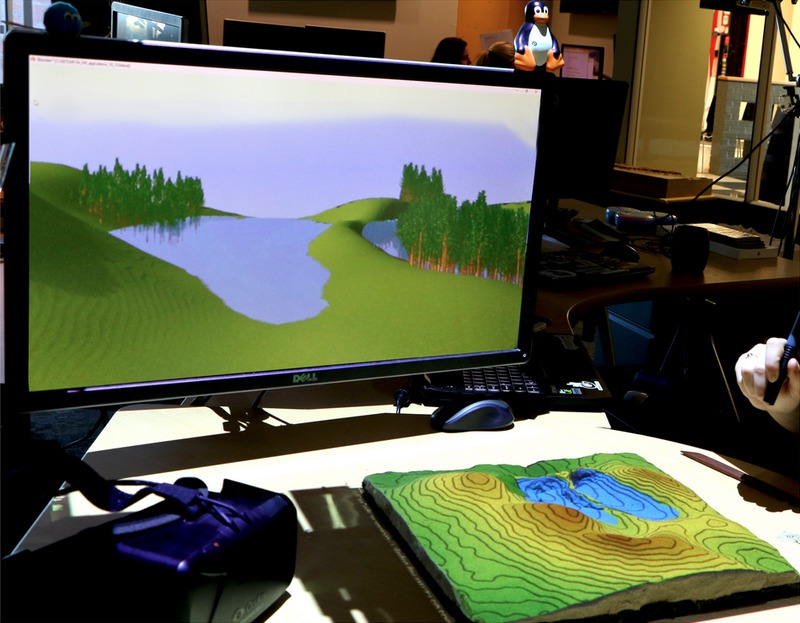

Immersive Tangible Modelling with Geospatial Data

by Payam Tabrizian, Brendan Harmon, Anna Petrasova, Vaclav Petras, Helena Mitasova, and Ross Meentemeyer from North Carolina State University

- Software used: GRASS GIS, Blender, Tangible Landscape

- Extended abstract:

- Recently, the importance of integrating human perceptions and preferences into geospatial modeling has been broadly acknowledged. Therefore, new types of interfaces are needed to enable experts and non-experts to collaboratively model landscapes and explore the impacts of “what if” scenarios on both environmental and experiential dimensions. Separate modes of representation, however, are needed to communicate these two dimensions effectively. While perceptions of landscapes are best represented through photorealistic renderings viewed from human-scale perspectives, geospatial simulations are best represented more abstractly to enhance readability.

- We propose a framework, Immersive Tangible Geospatial Modelling, that couples tangible interaction with simultaneous representation of realistic perspectives and 3D augmented geospatial analytics. It leverages the natural interaction of Tangible User Interfaces (TUI) and the realistic representation offered by Immersive Virtual Environments (IVE). The framework is implemented using Tangible Landscape, a collaborative tangible user interface for GIS that allows multiple users to reshape a tangible model of the landscape by hand and receive real-time, projection-augmented feedback from geospatial simulations. We paired Tangible Landscape with state-of-the-art 3D modeling and rendering software to allow real-time, high-definition rendering in the viewport, which can be projected as stereoscopic images into head-mounted displays. We programmed the 3D content of the virtual landscape to be adaptive, so that each element of the 3D world has a specific agency and behavior linked to that of the corresponding tangible object. Instead of typical cartographic elements and symbols, users interact with tangible objects and, in near real time, perceive 3D augmented geospatial simulations on the tangible model as well as realistic renderings of human-scale views on a computer display and in head-mounted displays.

- In the first layer of the framework’s architecture, different modes of interaction (e.g., sculpting the terrain by hand, adding felt pieces, adding and replacing symbolic trees, tracing large vegetated surface patches or water bodies with a laser pointer) with tangible objects (e.g., kinetic sand, wooden place markers, symbolic foam trees, felt pieces) are translated into basic geospatial features (points, patches, continuous surfaces). Using GRASS GIS, an open source GIS platform, these data are used to parametrize geospatial simulations (e.g., water flow, sedimentation, erosion, least-cost path), which are then projected back onto the tangible model. An open source 3D modeling and rendering pipeline (i.e., Blender) is loosely coupled with GRASS GIS to update the composition (location, size, pattern, texture) and vision (camera position, viewing direction) parameters of a georeferenced 3D semiotic model based on the corresponding tangible objects. In Blender, shading parameters such as ambient occlusion, ambient lighting, anti-aliasing, and ray-traced shadows are set up (OpenGL shading) to support real-time viewport rendering. Using an open source API for immersive technology (i.e., OpenHMD), the viewport is projected into a head-mounted display with built-in head tracking (Oculus Rift DK2 or CV1).
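As a rough illustration of how the vision parameters could follow the tangible objects, the sketch below places a camera at one georeferenced marker and aims it at a second one. The function `camera_from_markers`, the `CameraPose` class, and the 1.75 m default eye height are hypothetical; this is not the actual Tangible Landscape and Blender coupling code, and converting the compass bearing into Blender's own rotation convention is left out.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraPose:
    """Hypothetical camera parameters for a human-scale view."""
    x: float
    y: float
    z: float            # eye elevation: terrain elevation plus eye height
    heading_deg: float  # compass bearing of the view: 0 = north, 90 = east


def camera_from_markers(viewpoint, target, ground_z, eye_height=1.75):
    """Place a camera at one marker and aim it at another (hypothetical helper).

    viewpoint, target: (x, y) map coordinates of the two detected markers.
    ground_z: terrain elevation at the viewpoint, e.g. sampled from the scanned DEM.
    eye_height: assumed observer eye height in map units (hypothetical default).
    """
    dx = target[0] - viewpoint[0]
    dy = target[1] - viewpoint[1]
    if dx == 0 and dy == 0:
        raise ValueError("viewpoint and target markers coincide")
    # atan2(dx, dy) measures the angle clockwise from north (+y), i.e. a bearing.
    heading = math.degrees(math.atan2(dx, dy)) % 360.0
    return CameraPose(float(viewpoint[0]), float(viewpoint[1]),
                      ground_z + eye_height, heading)
```

For example, `camera_from_markers((100, 200), (200, 200), ground_z=50.0)` yields a camera 1.75 units above the terrain looking east (heading 90). Using a bearing keeps the parameter independent of any particular renderer, so the same value could drive a 3D view in GRASS GIS or a Blender camera.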

- In this talk, we will first provide an overview of TUIs, IVEs, and adaptive 3D modeling and the rationale behind our proposed framework. Then, we will describe the framework’s architecture and the workflow for transforming interaction with tangible objects into realistic representations of landscape features. We will demonstrate the framework’s implementation with a collaborative landscape design case study in which users search for solutions that balance aesthetic (e.g., viewsheds and vantage points) and ecological (e.g., water flow) dimensions.

Using space-time cube for visualization and analysis of active transportation patterns derived from public webcams

by Anna Petrasova, Aaron Hipp, and Helena Mitasova from North Carolina State University

- Software used: Jupyter, Python, Matplotlib, GRASS GIS, ParaView

- Extended abstract:

- Introduction: Active transportation such as walking has been shown to provide many benefits, from improved health to reduced traffic problems. Whether citizens adopt active transportation is influenced by the built environment; consequently, a better understanding of this link would allow urban planners to design public spaces and transportation networks more effectively. AMOS (the Archive of Many Outdoor Scenes) is a database of images captured by publicly available webcams since 2006, providing an unprecedented time series of data about the dynamics of public spaces. Previous studies have demonstrated that using webcams together with crowdsourcing platforms, such as Amazon Mechanical Turk (MTurk), to locate pedestrians in the captured images is a promising technique for analyzing active transportation behavior (Hipp et al., 2016). However, it is challenging to efficiently extract relevant information, such as geospatial patterns of pedestrian density, and to visualize it in a meaningful way that can inform urban design or decision making. In this study, we propose using a space-time cube (STC) representation of active transportation data to analyze the behavior patterns of pedestrians in public spaces. While STCs have already been used to visualize urban dynamics, this is the first study analyzing the evolution of pedestrian density based on crowdsourced time series of pedestrian occurrences captured in webcam images.

- Methods: We compiled webcam images capturing public squares of towns and cities in several locations around the world over a two-week period. We tasked MTurk workers with annotating pedestrian locations in the webcam images. Pedestrian locations referenced to the image coordinate system are not suitable for further spatial analysis, since they do not allow us to measure distances or incorporate relevant geospatial data. Therefore, we used a projective transformation to georeference the locations, matching stable features in the webcam images to identical features in an orthophoto. To analyze pedestrian behavior throughout a day, we temporally aggregated the collected data and represented the new dataset in a space-time cube, where X and Y are the georeferenced positions of pedestrians and Z is the time of day extracted from the webcam images. From this three-dimensional point dataset, we created a voxel model by estimating the spatio-temporal density of pedestrians using trivariate kernel density estimation.
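The georeferencing step can be sketched with a standard direct linear transform (DLT): at least four stable features matched between a webcam image and the orthophoto define a 3×3 projective transformation, which is then applied to every annotated pedestrian location. This is a minimal NumPy sketch under that assumption, not the study's own code; the function names are hypothetical, and both point sets are normalized first (Hartley normalization) because raw pixel and map coordinates make the linear system poorly conditioned.

```python
import numpy as np


def apply_homography(h, points):
    """Map (n, 2) points through the 3x3 matrix h, including the perspective divide."""
    pts = np.asarray(points, dtype=float)
    homogeneous = np.column_stack([pts, np.ones(len(pts))])
    mapped = homogeneous @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


def _normalizing_transform(pts):
    """Similarity transform moving pts to the origin with mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    return np.array([[s, 0.0, -s * centroid[0]],
                     [0.0, s, -s * centroid[1]],
                     [0.0, 0.0, 1.0]])


def estimate_homography(src, dst):
    """Estimate h such that dst ~ h @ src from >= 4 non-collinear point pairs.

    src: (n, 2) image coordinates of stable features (pixels).
    dst: (n, 2) map coordinates of the same features in the orthophoto.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.ndim != 2 or src.shape != dst.shape or src.shape[1] != 2 or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    t_src = _normalizing_transform(src)
    t_dst = _normalizing_transform(dst)
    s = apply_homography(t_src, src)
    d = apply_homography(t_dst, dst)
    # Each correspondence gives two linear equations in the 9 entries of h.
    rows = []
    for (x, y), (u, v) in zip(s, d):
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y, -u])
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y, -v])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    h_norm = vt[-1].reshape(3, 3)
    # Undo the normalization so h maps original pixels to original map coordinates.
    h = np.linalg.inv(t_dst) @ h_norm @ t_src
    return h / h[2, 2]
```

For example, `apply_homography(estimate_homography(image_pts, ortho_pts), pedestrian_pixels)` would return pedestrian positions in the orthophoto's map coordinates (the variable names are placeholders). With more than four control points the DLT gives a least-squares fit, which helps absorb small errors in matching features.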

- Results: We analyzed scenes of plazas in several cities, revealing different spatio-temporal patterns. One of the studied plazas was analyzed before and after it was redesigned, allowing us to directly study the effect of changes to the built environment on pedestrian behavior. We interactively visualized the spatio-temporal patterns of pedestrian density using isosurfaces for given densities of interest. The shape of an isosurface shows the spatio-temporal evolution of pedestrian density. The time axis is represented as a color ramp draped over the isosurface, making the relationship between time and the shape of the isosurface easier to understand. To assess the impact of the redesign on pedestrian movement across the studied plaza, we computed and visualized a voxel model representing the difference between pedestrian density before and after the change. The model suggests that a more complex spatio-temporal pattern of behavior developed after the change.
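The space-time cube density and the difference model can be sketched with a Gaussian product kernel: timestamps from different days are collapsed onto one 24-hour axis, each pedestrian (x, y, t) point contributes a 3D Gaussian, and the contributions are summed at voxel centers. The kernel choice, the bandwidths, and the function names below are assumptions for illustration; the abstract does not specify the study's implementation. Isosurfaces for a density level of interest would then be extracted from the resulting array by a visualization tool such as ParaView.

```python
from collections.abc import Sequence
from datetime import datetime

import numpy as np


def time_of_day_hours(timestamps: Sequence[datetime]) -> np.ndarray:
    """Collapse datetimes from different days onto one 24-hour axis (in hours)."""
    return np.array([t.hour + t.minute / 60.0 + t.second / 3600.0 for t in timestamps])


def stc_density(points, grid_x, grid_y, grid_t, bandwidth):
    """Trivariate Gaussian KDE of (x, y, t) points evaluated at voxel centers.

    points: (n, 3) array of georeferenced x, y (map units) and time of day t (hours).
    grid_x, grid_y, grid_t: 1D voxel-center coordinates along each axis.
    bandwidth: (hx, hy, ht) kernel standard deviations per axis (assumed values).
    Returns an array of shape (len(grid_x), len(grid_y), len(grid_t)).
    """
    pts = np.asarray(points, dtype=float)
    gx, gy, gt = (np.asarray(g, dtype=float) for g in (grid_x, grid_y, grid_t))
    hx, hy, ht = bandwidth
    # Product kernel: the 3D Gaussian factorizes into one 1D Gaussian per axis,
    # so each axis is evaluated separately (shape: grid size x number of points).
    kx = np.exp(-0.5 * ((gx[:, None] - pts[:, 0]) / hx) ** 2)
    ky = np.exp(-0.5 * ((gy[:, None] - pts[:, 1]) / hy) ** 2)
    kt = np.exp(-0.5 * ((gt[:, None] - pts[:, 2]) / ht) ** 2)
    # Sum kx * ky * kt over points, then normalize so the density integrates to 1.
    norm = len(pts) * (2.0 * np.pi) ** 1.5 * hx * hy * ht
    return np.einsum("ap,bp,cp->abc", kx, ky, kt) / norm


def density_difference(before, after, grid_x, grid_y, grid_t, bandwidth):
    """Voxel model of change: positive where density increased after the redesign."""
    return (stc_density(after, grid_x, grid_y, grid_t, bandwidth)
            - stc_density(before, grid_x, grid_y, grid_t, bandwidth))
```

Dividing by the number of points makes each model a probability density, so the difference compares the shape of the two patterns rather than total pedestrian counts; multiplying each model by its number of observations would compare absolute intensities instead. The sketch also ignores the wrap-around at midnight, which a circular kernel on the time axis would handle.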

- Conclusion: By representing pedestrian density as a georeferenced STC, we were able to generate visualizations that represent complex, spatio-temporal information in a concise, readable way. By harnessing the power of crowdsourced and open data, this study opens new possibilities for the analysis of the dynamics of public spaces within their geospatial context.

- References: Hipp, J. A., Manteiga, A., Burgess, A., Stylianou, A., & Pless, R. (2016). Webcams, crowdsourcing, and enhanced crosswalks: Developing a novel method to analyze active transportation. Frontiers in Public Health, 4, 97.